Prepare for the challenges of tomorrow, today. Discover how to design cybersecurity strategies that evolve along with your generative AI. An essential roadmap for CISOs at the forefront of technology innovation.

Every week, new examples are presented that highlight the revolutionary potential of AI in solving global challenges, such as climate change.

However, there are also disturbing warnings about the potential risks to human society from this technology.

Amid this constant flow of information, CISOs are in dire need of concrete, practical guidance on how to establish robust AI security measures, thereby fortifying their organizations’ defenses.

We cannot overlook the most prominent and insightful voices in the technology community when they emphasize that proactively addressing these risks must be a global priority.

A key moment in this regard occurred on May 30, 2023, when the Center for AI Safety issued an open letter that gathered signatures from more than 350 renowned scientists and business leaders.

This document emphatically warned about the potential extreme dangers that AI could bring if not adequately addressed.

Fearing the worst may actually be a distraction from dealing with the dangers of AI

As many media outlets have pointed out, worrying excessively about worst-case scenarios could become a harmful distraction from confronting the real risks that AI presents today.

These tangible risks include bias built into AI systems and the proliferation of false information, problems we are already dealing with.

A recent example of these challenges made headlines when it was discovered that an AI-generated legal brief included fictitious cases in its content.

Faced with this reality, the following question naturally arises: “How can CISOs strengthen AI security in their respective organizations?”

CISOs need to support AI tools and understand them, not ban them.

Over the past decade, corporate IT security experts have experienced firsthand that the strategy of banning certain programs and devices tends to backfire, potentially even increasing organizational risk.

When an application or solution is seen as effective, or when the tools approved by the company do not meet all of their needs, users find ways to keep the tools they are most comfortable with. This, in turn, gives rise to the shadow IT phenomenon.

Considering that ChatGPT acquired more than 100 million users in just three months, it seems clear that similar generative AI platforms will soon be firmly entrenched in users’ work routines, if they are not already.

Imposing an enterprise-wide ban could create a “shadow AI” scenario that presents even greater risks than any earlier form of covert tool use.

Restricting the use of AI would affect productivity

Many companies are embracing AI as a means to improve productivity, which would make it considerably more difficult to restrict its use.

Therefore, the task ahead for CISOs lies in providing employees with access to AI tools backed by sensible policies for their use.

Although examples of such policies have begun to circulate online, especially for prominent language models such as ChatGPT, and advice has been offered for assessing the security risks associated with AI, standard approaches have not yet been established.

Even reputable organizations such as the IEEE have not addressed this issue comprehensively. Although the quality of online information is constantly improving, it is not always reliable.

Therefore, any organization seeking to adopt security policies for AI must choose its sources and models carefully.

Analyzing AI security policy from four essential perspectives

Considering the aforementioned risks, it is clear that preserving the privacy and integrity of enterprise data emerges as a primary goal in AI cybersecurity. Therefore, any policy that a company establishes must, at a minimum:

1. Prevent the disclosure of confidential information on public AI platforms or third-party solutions that are not under the company’s control.

Until there is greater clarity in this regard, companies should guide their employees to treat any information shared via ChatGPT and other public AI tools as if they were posting it on a public website or social network, the ICT research and consulting firm Gartner said in a recent statement.

2. Do not mix data categories

Be sure to maintain well-defined separation rules for the various categories of data, ensuring that personally identifiable information and any data subject to legal rules or regulations are never combined with information that can be shared publicly.
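As a rough illustration of how such guidance might be backed by tooling, the sketch below redacts a couple of sensitive patterns from a prompt before it leaves the company. The function name `redact_prompt` and the two patterns are assumptions made for this example; a real deployment would rely on the organization's own data loss prevention rules.

```python
import re

# Hypothetical patterns for illustration only; real rules would come
# from the organization's own data loss prevention policy.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact_prompt(prompt):
    """Replace sensitive matches with placeholders.

    Returns the redacted text and the list of categories that were found.
    """
    found = []
    redacted = prompt
    for label, pattern in SENSITIVE_PATTERNS.items():
        redacted, count = pattern.subn(f"[REDACTED:{label}]", redacted)
        if count:
            found.append(label)
    return redacted, found


if __name__ == "__main__":
    text, hits = redact_prompt("Summarize the complaint from jane.doe@example.com")
    print(text)
    print(hits)
```

A filter like this only catches what its patterns describe, so it complements employee guidance rather than replacing it.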

This could involve implementing a data classification structure within the organization, if one is not already in place.
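One way to picture a data classification structure is as an ordered set of labels, where any combined data set inherits the strictest label of its parts. The sketch below assumes four hypothetical levels (`PUBLIC`, `INTERNAL`, `CONFIDENTIAL`, `REGULATED`); the names and the number of levels are illustrative and would differ from one organization to another.

```python
from enum import IntEnum


class Classification(IntEnum):
    """Hypothetical classification levels, ordered from least to most strict."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    REGULATED = 3  # e.g. personally identifiable or legally protected data


def effective_classification(labels):
    """A combined data set inherits the strictest label of its parts."""
    labels = list(labels)
    if not labels:
        # Unlabeled data is rejected rather than silently treated as public.
        raise ValueError("every data item must carry a classification label")
    return max(labels)


def may_share_publicly(labels):
    """Only data made up entirely of PUBLIC items may leave the company."""
    return effective_classification(labels) == Classification.PUBLIC


if __name__ == "__main__":
    mixed = [Classification.PUBLIC, Classification.REGULATED]
    print(effective_classification(mixed).name)
    print(may_share_publicly(mixed))
```

The point of the "strictest label wins" rule is that mixing a single regulated record into otherwise public material makes the whole set unfit for a public AI tool.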

3. Verify and validate all information produced by AI platforms, ensuring its veracity and accuracy.

Companies face a significant risk in disseminating AI-generated results that are demonstrably false, which could have a disastrous impact both financially and on the corporate image.

It is imperative that platforms capable of generating citations and references to sources be required to do so, with rigorous verification of such references.

In addition, every assertion in AI-created content should be subject to close scrutiny before use.

Gartner stresses that although ChatGPT may appear to perform highly complex tasks, it lacks understanding of the underlying concepts and merely generates predictions. It is therefore vital that any AI-generated information undergoes a thorough evaluation before it is used in any context.

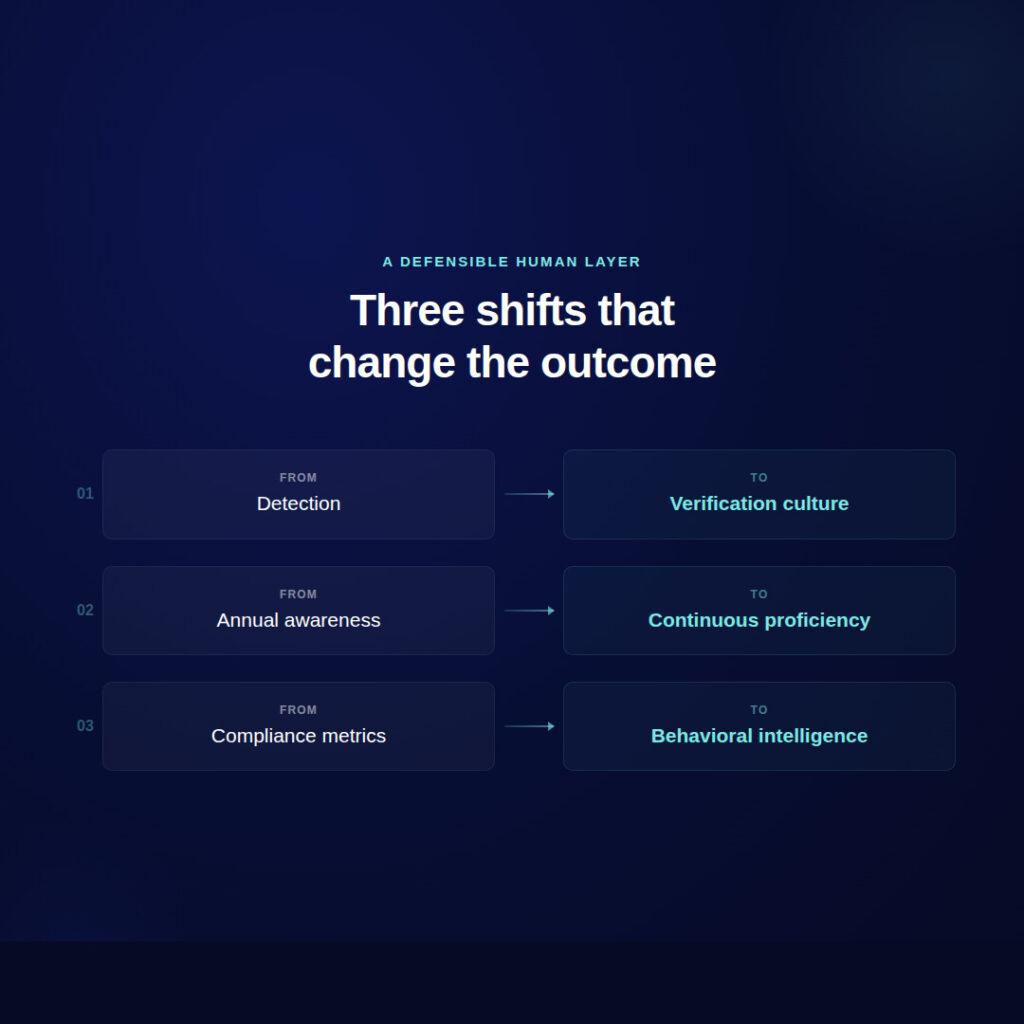

4. Establish a ‘zero trust’ model

In managing the risks associated with user, device, and application access to the company’s IT resources and data, the “zero trust” approach is a sound strategy.

As organizations find themselves grappling with the blurring of the conventional boundaries of their business networks, this concept gains more and more followers.

While the ability of AI to emulate trusted entities poses an undeniable challenge for zero trust architectures, it ultimately underscores the importance of exercising rigorous control over untrusted connections.

The AI-driven evolution of threats makes the vigilance of the “zero trust” approach truly crucial. In the ever-changing scenario of AI, organizations must maintain a proactive and robust stance on their cybersecurity strategy, considering both technological advances and emerging risks.
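The core idea of zero trust, that nothing is trusted by default and every request is checked, can be sketched in a few lines. Everything in the example below is hypothetical: the `AccessRequest` fields, the `ALLOWED_ROLES` table, and the resource names are assumptions chosen for illustration, not a reference to any specific product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user_authenticated: bool  # identity verified for this request, e.g. via MFA
    device_compliant: bool    # device passed a posture check
    user_role: str
    resource: str


# Hypothetical policy table: resource name -> roles allowed to reach it.
ALLOWED_ROLES = {
    "hr-records": {"hr"},
    "build-server": {"engineering"},
}


def authorize(request):
    """Deny by default: access is granted only if every check passes."""
    allowed = ALLOWED_ROLES.get(request.resource, set())
    return (
        request.user_authenticated
        and request.device_compliant
        and request.user_role in allowed
    )


if __name__ == "__main__":
    req = AccessRequest(True, True, "hr", "hr-records")
    print(authorize(req))
```

Note that a resource missing from the policy table is denied to everyone, which is the deny-by-default behavior the approach depends on.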

Choosing the right tools

Policies aimed at safeguarding AI security can be supported and enforced by advanced technology.

New AI tools are under development to detect AI-generated scams and schemes, as well as to identify plagiarized text and other cases of misuse.

Today, extended detection and response (XDR) solutions can already be used to monitor anomalous behavior in enterprise computing environments.

Leveraging the power of AI and machine learning, XDR processes massive volumes of telemetry data, enabling it to monitor and enforce network norms at scale.

In addition, other categories of monitoring tools, such as security information and event management (SIEM) systems, application firewalls, and data loss prevention solutions, offer opportunities to manage web browsing and software usage by users.
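To make the idea of spotting anomalous behavior in telemetry concrete, the sketch below flags values that deviate strongly from a baseline. It is a deliberately simple statistical check, not a description of how any XDR product works; the function name `find_anomalies` and the default threshold of three standard deviations are assumptions made for this example.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observations, threshold=3.0):
    """Return observations far from the baseline mean.

    An observation is flagged when it lies more than `threshold`
    standard deviations away from the mean of the baseline values.
    The baseline needs at least two values.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value is unusual.
        return [x for x in observations if x != mu]
    return [x for x in observations if abs(x - mu) / sigma > threshold]


if __name__ == "__main__":
    # Hypothetical telemetry: outbound requests per minute from one workstation.
    normal_traffic = [100, 102, 98, 101, 99]
    print(find_anomalies(normal_traffic, [101, 97, 250]))
```

Production systems combine many more signals and learned models, but the underlying pattern is the same: establish what normal looks like, then surface what departs from it.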

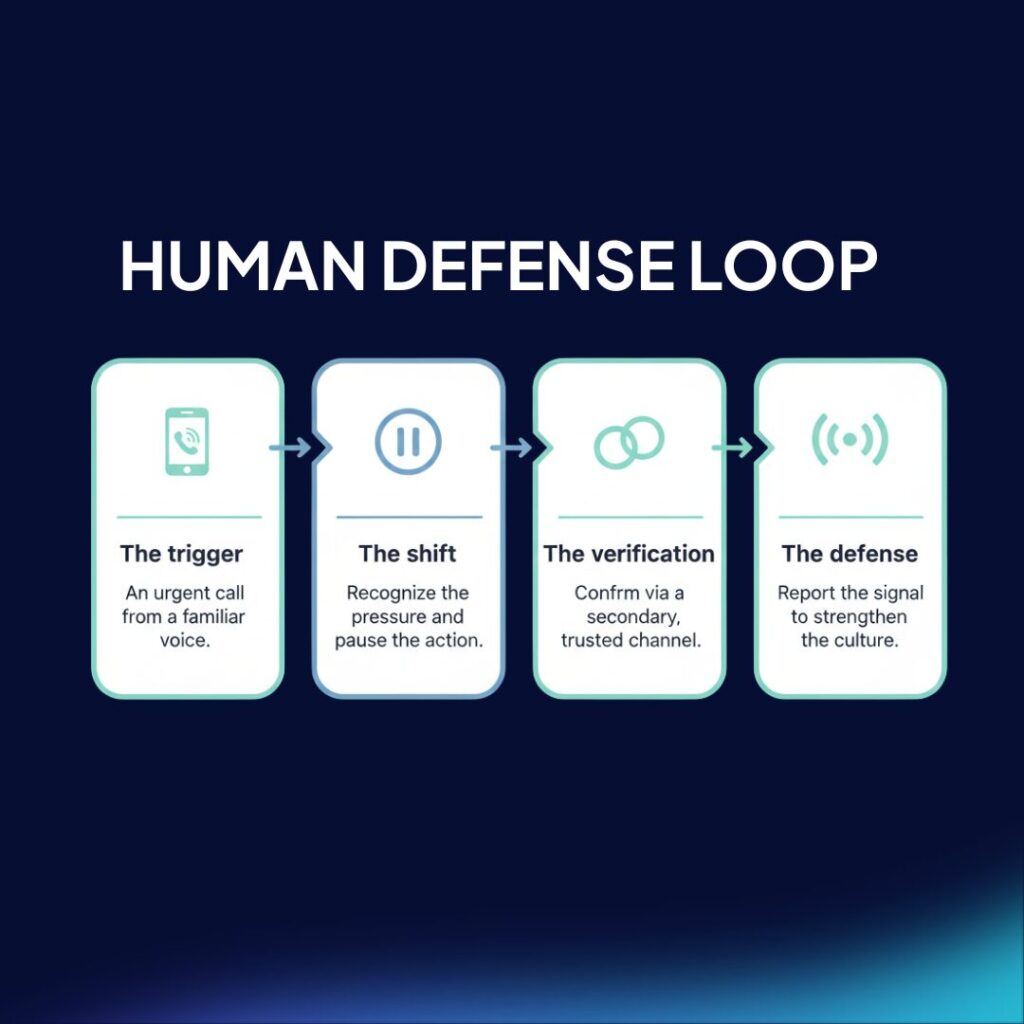

In this area, cybersecurity awareness is of great importance and plays a fundamental role in companies. Human error accounts for 88-95% of data breaches. The employee is often the weakest link in the chain, exposing the company to high recovery costs, reputational damage, and social consequences.

A company’s employees handle a large amount of sensitive data. The goal of cybersecurity awareness is for employees to become a human firewall against cyberattacks.

But how can this be achieved? Through cybersecurity training and awareness programs, so that each employee takes individual responsibility for protecting the company’s most sensitive and critical information.

AI is here to stay

In short, we are at the dawn of an exciting, challenging story in an AI-driven world.

While the future is still uncertain, one fact remains undeniable: AI is here to stay, and despite the concerns it raises, its potential is staggering if we build and use it with care.

As time progresses, we will see AI itself emerge as an essential tool to combat misuse of its own technology.

Today, however, the best defense is to take a clear and informed approach from the outset.

Faced with the countless possibilities and challenges that AI has in store for us, it is essential that all stakeholders, from companies to cybersecurity experts, take a responsible and committed stance.

With one eye on the future and the other on prudence, we can forge a path to security excellence in a world fueled by artificial intelligence.